Imagine you have a Python application that uses the psycopg2 package.

To install this package you need the libpq-dev system library as well as a C compiler. (Yes, you could install psycopg2-binary instead and avoid these problems, but it doesn't really matter which library we pick as an example.)

Your Dockerfile might look similar to this. (I use a venv because it will help with the multistage build later.)

    FROM python:3.9.6-slim-buster

    # I create the venv outside the workdir,
    # so even if we mount a local folder into the container
    # it won't be affected.
    RUN python3 -m venv /opt/.venv
    # ensure that the virtualenv will be active
    ENV PATH="/opt/.venv/bin:$PATH"

    RUN apt-get update && \
        apt-get upgrade -y && \
        apt-get -y install --no-install-recommends libpq-dev build-essential && \
        rm -rf /var/lib/apt/lists/*

    # Install dependencies:
    COPY requirements.txt .
    RUN pip install -r requirements.txt

    COPY main.py .
    CMD ["python", "main.py"]

main.py:

    import psycopg2

    # Connect to your postgres DB
    conn = psycopg2.connect(dbname="test",
                            user="postgres",
                            password="secret",
                            host="db")

    # Open a cursor to perform database operations
    cur = conn.cursor()
    cur.execute("SELECT now();")

requirements.txt:

    psycopg2==2.9.1

If you run `docker build -t multistage .`, the image will be around 347 MB.

At runtime our app doesn't need `build-essential`, but in a single-stage build we have to keep it: because of Docker's layered filesystem, removing it in a later layer would not make the image any smaller. Maybe a multistage approach will help? It definitely will! Let's take a look.

FROM python:3.9.6-slim-buster AS build-base

RUN python3 -m venv /opt/.venv

# ensure that virtualenv will be active

ENV PATH="/opt/.venv/bin:$PATH"

RUN apt-get update && \

apt-get upgrade -y && \

apt-get -y install --no-install-recommends libpq-dev build-essential && \

apt-get clean && \

rm -rf /var/lib/apt/lists/*

# Install dependencies:

COPY requirements.txt .

RUN pip install -r requirements.txt

FROM python:3.9.6-slim-buster AS release

WORKDIR /code/

ENV PATH="/opt/.venv/bin:$PATH"

# Copy only virtualenv with all packages

COPY --from=build-base /opt/.venv /opt/.venv

# Run the application:

COPY main.py .

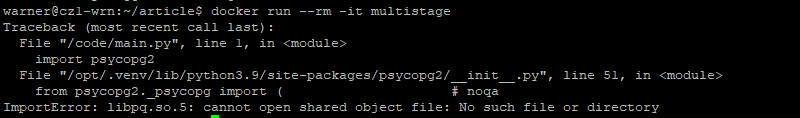

CMD ["python", "main.py"]The new image takes only 128 mb! The only problem - it doesn't work

What is libpq.so.5? It's a shared library: compiled C code from the PostgreSQL client library, which psycopg2 uses under the hood (similar to .dll files on Windows). To get it we need to install the libpq5 system package via apt-get install.
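To check quickly whether a given shared library is available in an image, you can ask the dynamic loader directly from Python. This is only a minimal sketch: the helper name `can_load_shared_library` is made up for illustration, and it relies on nothing but the standard `ctypes` module.

```python
import ctypes


def can_load_shared_library(name: str) -> bool:
    """Return True if the dynamic loader can find and load `name`."""
    try:
        ctypes.CDLL(name)
    except OSError:
        # Raised when the library (or one of its own dependencies) is missing.
        return False
    return True


if __name__ == "__main__":
    # libpq.so.5 is the file psycopg2 needs at import time.
    print("libpq.so.5 loadable:", can_load_shared_library("libpq.so.5"))
```

Run inside the release image, this should print `False` until the libpq5 package is installed.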

So how can one find such dependencies? The idea is simple.

- Scan all files inside our virtualenv and find all `.so` and executable files (because these are the only files that can link to other shared libraries).
- Use the `ldd` command to find out which shared libraries those files use.
- With `dpkg -S` we can find the name of the system package that contains the needed shared library (dpkg is for Debian-based images, but other distros have their own similar commands).
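To make the data flow concrete, here is a small sketch of the last two steps applied to single lines of output. The two sample lines are illustrative, written in the format `ldd` and `dpkg -S` normally use rather than captured from a real run, and the function names are hypothetical.

```python
from typing import Optional

# Illustrative sample lines (assumed format, not captured from a real run).
SAMPLE_LDD_LINE = "libpq.so.5 => /usr/lib/x86_64-linux-gnu/libpq.so.5 (0x00007f0000000000)"
SAMPLE_DPKG_LINE = "libpq5:amd64: /usr/lib/x86_64-linux-gnu/libpq.so.5"


def path_from_ldd_line(line: str) -> Optional[str]:
    """Return the resolved library path from one ldd output line, if any."""
    if "=>" not in line:
        return None
    target = line.split("=>", 1)[1].split()
    # Unresolved entries look like "libfoo.so.1 => not found".
    if not target or not target[0].startswith("/"):
        return None
    return target[0]


def package_from_dpkg_line(line: str) -> str:
    """Return the package name, i.e. everything before the first colon."""
    return line.split(":", 1)[0]


if __name__ == "__main__":
    print(path_from_ldd_line(SAMPLE_LDD_LINE))
    print(package_from_dpkg_line(SAMPLE_DPKG_LINE))
```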

I created a Python script, find_deps.py, that performs all these steps:

    import stat
    import subprocess
    import sys
    from pathlib import Path
    from typing import List, Optional, Generator

    EXECUTABLE_PERMISSIONS = stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH


    def is_executable(filepath: Path) -> bool:
        return bool(filepath.stat().st_mode & EXECUTABLE_PERMISSIONS)


    def find_all_executable_or_so_libs(venv_dir: Path) -> List[Path]:
        executable_files = set()
        for f in venv_dir.rglob('*'):
            if f.is_dir():
                continue
            if f.name.endswith('.so') or is_executable(f):
                executable_files.add(f)
        return sorted(list(executable_files))


    def extract_lib_paths(dynamic_str: bytes) -> Optional[str]:
        """
        >>> extract_lib_paths(b"linux-vdso.so.1 (0x00007ffee695e000)")
        >>> extract_lib_paths(b"libpthread.so.0 => /usr/lib/libpthread.so.0 (0x00007f1475154000)")
        '/usr/lib/libpthread.so.0'
        >>> extract_lib_paths(b"libpthread.so.0 => libpthread.so.0 (0x00007f1475154000)")
        """
        if b'=>' not in dynamic_str:
            return None
        decoded_path = dynamic_str.decode(encoding='utf-8').strip()
        dyn_lib_path = decoded_path.split()[2].strip()
        if '/' not in dyn_lib_path:
            return None
        return dyn_lib_path


    def find_linked_libs(filepaths: List[Path]) -> List[str]:
        result = set()
        for interesting_file in filepaths:
            p = subprocess.Popen(['ldd', interesting_file.absolute()], stdout=subprocess.PIPE, stderr=subprocess.PIPE)
            for ln in p.stdout:
                lib_path = extract_lib_paths(ln)
                if lib_path:
                    result.add(lib_path)
        return sorted(list(result))


    def who_owns_debian(lib_path: str) -> Generator[str, None, None]:
        p = subprocess.Popen(['dpkg', '-S', lib_path], stdout=subprocess.PIPE, stderr=subprocess.PIPE)
        lines = p.stdout.readlines()
        for line in lines:
            line = line.decode('utf-8')
            if '/' in line and ':' in line:
                package_name = line.split(':')[0]
                yield package_name


    def collect_package_names_debian(shared_libs_paths: List[str]):
        total_names = set()
        for one_lib_path in shared_libs_paths:
            for pkg_name in who_owns_debian(one_lib_path):
                if pkg_name:
                    total_names.add(pkg_name)
        return sorted(list(total_names))


    def main(source_dir):
        source_dir = Path(source_dir)
        interesting_files = find_all_executable_or_so_libs(source_dir)
        shared_libs = find_linked_libs(interesting_files)
        all_names = collect_package_names_debian(shared_libs)
        for name in all_names:
            print(name)


    if __name__ == '__main__':
        main(sys.argv[1])

For other distros you only need to change the `who_owns_debian` function so that it calls the appropriate command and parses its output.
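As an illustration, an RPM-based variant might look like the sketch below. It assumes that `rpm -qf` with a `--queryformat` of `%{NAME}` prints the name of the owning package and exits with a non-zero status when no package owns the file. The function names are hypothetical and I have not run this on an RPM-based image.

```python
import subprocess
from typing import List


def parse_rpm_owner_output(stdout: str, returncode: int) -> List[str]:
    """Turn the output of `rpm -qf` into a sorted list of unique package names."""
    if returncode != 0:
        # Assumption: a non-zero exit status means no package owns the file.
        return []
    return sorted({line.strip() for line in stdout.splitlines() if line.strip()})


def who_owns_rpm(lib_path: str) -> List[str]:
    """Hypothetical counterpart of who_owns_debian for RPM-based distros."""
    proc = subprocess.run(
        ["rpm", "-qf", "--queryformat", "%{NAME}\\n", lib_path],
        capture_output=True,
        text=True,
        check=False,
    )
    return parse_rpm_owner_output(proc.stdout, proc.returncode)
```

Keeping the parsing in its own function makes it easy to test without having `rpm` installed.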

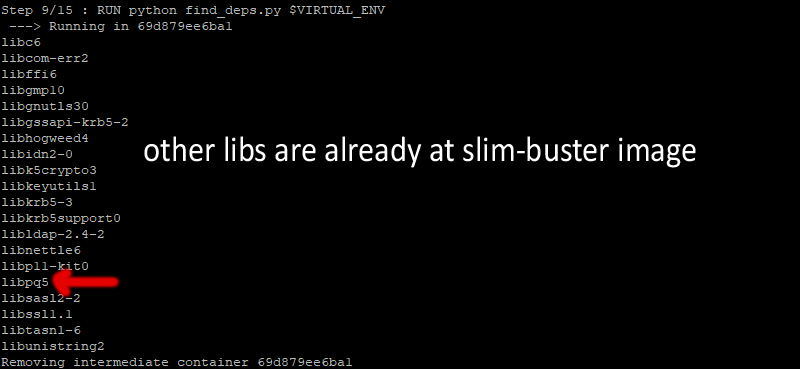

Run it as `python find_deps.py /opt/.venv`, for example as a step in the build stage. Be sure not to remove any build dependencies before that, because it will affect the result.

    COPY requirements.txt .
    RUN pip install -r requirements.txt

    COPY find_deps.py find_deps.py
    RUN python find_deps.py $VIRTUAL_ENV

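You can save the output to a file:

    RUN python find_deps.py $VIRTUAL_ENV > sys_deps.txt

And in the next stage install the listed packages automatically, like so (the package lists have to be refreshed with apt-get update first):

    COPY --from=build-base /sys_deps.txt /sys_deps.txt
    RUN apt-get update && \
        cat /sys_deps.txt | xargs apt-get install -y --no-install-recommends && \
        rm -rf /var/lib/apt/lists/*

But I prefer to list them by hand. The final multistage Dockerfile is below.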

    FROM python:3.9.6-slim-buster AS build-base

    ENV VIRTUAL_ENV=/opt/.venv
    RUN python3 -m venv $VIRTUAL_ENV
    # ensure that the virtualenv will be active
    ENV PATH="$VIRTUAL_ENV/bin:$PATH"

    RUN apt-get update && \
        apt-get upgrade -y && \
        apt-get -y install --no-install-recommends libpq-dev build-essential && \
        rm -rf /var/lib/apt/lists/*

    # Install dependencies:
    COPY requirements.txt .
    RUN pip install -r requirements.txt

    COPY find_deps.py find_deps.py
    RUN python find_deps.py $VIRTUAL_ENV

    FROM python:3.9.6-slim-buster AS release

    WORKDIR /code/
    ENV PATH="/opt/.venv/bin:$PATH"

    # install system dependencies
    RUN apt-get update && \
        apt-get upgrade -y && \
        apt-get -y install --no-install-recommends libpq5 && \
        rm -rf /var/lib/apt/lists/*

    COPY --from=build-base /opt/.venv /opt/.venv

    # Run the application:
    COPY main.py .
    CMD ["python", "main.py"]

| Step | Image size | Working app? |
|---|---|---|
| Full build | 347 MB | YES |
| Multistage (only venv) | 128 MB | NO |
| Multistage (venv + system deps) | 138 MB | YES |
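To put the table in relative terms, the savings can be computed directly. The sizes are the ones reported above; the helper name `saving_percent` is just for illustration.

```python
# Image sizes in MB, taken from the table above.
FULL_BUILD_MB = 347
VENV_ONLY_MB = 128
VENV_PLUS_DEPS_MB = 138


def saving_percent(before_mb: float, after_mb: float) -> float:
    """Relative size reduction in percent, rounded to one decimal place."""
    return round((before_mb - after_mb) / before_mb * 100, 1)


if __name__ == "__main__":
    print(saving_percent(FULL_BUILD_MB, VENV_PLUS_DEPS_MB))  # 60.2
    print(VENV_PLUS_DEPS_MB - VENV_ONLY_MB)                  # 10
```

So the working multistage image is about 60% smaller than the full build, and the runtime system dependencies cost only about 10 MB on top of the bare venv.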

I think it is worth the trouble.